HOLD UP! 📢

The Qwen team just unleashed their new AI family, Qwen3, on the world! And guess what? They’re dropping a TON of these models as OPEN SOURCE (Apache 2.0 license)! 🎉

Meet the Crew:

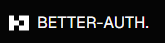

- The Big Boss: Qwen3-235B-A22B. A monster MoE (Mixture of Experts) model with 235 BILLION total params, but only 22B active per token (efficiency!). They claim it trades punches with heavyweights like DeepSeek-R1, Gemini-2.5-Pro, Grok-3, and more. 🥊

- The Scrappy MoE: Qwen3-30B-A3B. Only 3B active params, yet it reportedly beats older 32B models like QwQ-32B. Lean and mean! 💪

- Dense Models Galore: Six sizes, from 32B down through 14B, 8B, 4B, and 1.7B to a tiny 0.6B! Even the small ones reportedly punch way above their weight: Qwen3-4B is said to rival the older Qwen2.5-72B-Instruct beast! 🤯 Efficiency is the name of the game here.
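Curious how "235B total, 22B active" actually works? Here's a toy sketch of top-k expert routing, the basic MoE trick: a router scores every expert, only the k best ones run, and their outputs get blended with softmax weights. The function names and the scalar "experts" are hypothetical, and renormalizing the weights over just the chosen k is an assumption. The Qwen team reports 128 experts with 8 active per token for the Qwen3 MoE models, but this is NOT their code, just an illustration.

```python
import math
from typing import Callable, List, Tuple

def top_k_route(router_logits: List[float], k: int) -> List[Tuple[int, float]]:
    """Return (expert_index, weight) for the k highest-scoring experts.

    Weights are a softmax over only the selected logits, so they sum to 1.
    """
    if not 0 < k <= len(router_logits):
        raise ValueError("k must be between 1 and the number of experts")
    # Rank experts by router score, highest first, and keep the top k.
    ranked = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)
    top = ranked[:k]
    # Numerically stable softmax over the chosen experts only.
    peak = max(router_logits[i] for i in top)
    exps = [math.exp(router_logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]

def moe_forward(x: float, experts: List[Callable[[float], float]],
                router_logits: List[float], k: int) -> float:
    """Blend the outputs of the k routed experts; the other experts never run."""
    return sum(w * experts[i](x) for i, w in top_k_route(router_logits, k))

# Toy example: 4 hypothetical "experts" that just scale their input, 2 active per token.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
logits = [0.1, 0.2, 5.0, 5.0]  # router strongly prefers experts 2 and 3
print(top_k_route(logits, k=2))                 # [(2, 0.5), (3, 0.5)]
print(moe_forward(1.0, experts, logits, k=2))   # 3.5
```

Plug in Qwen3-235B-A22B's numbers and only about 22/235 ≈ 9.4% of the weights do any work for a given token. That's where the speed comes from!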

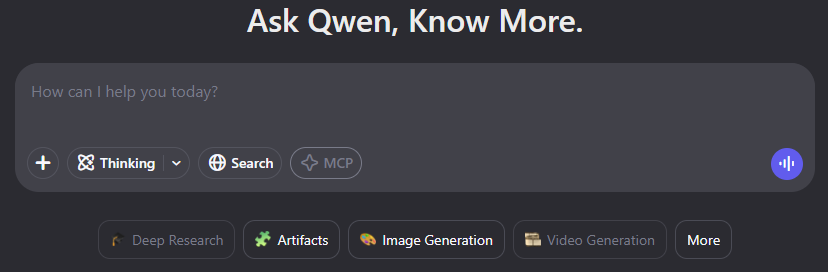

Hybrid Thinking Modes:

- 🤔 Thinking Mode: Need the AI to actually reason step-by-step on tough problems (math, code, logic)? Flip this switch ON! It takes its sweet time to ponder.

- ⚡ Non-Thinking Mode: Just need a fast answer to a simple question? Tell it NO THINKING! Get near-instant replies. BRRRR!

Best part: you control the “thinking budget”! Set enable_thinking=True/False when building the prompt in code, or drop /think and /no_think tags into your chat messages to switch modes turn by turn. Super flexible! ✅
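In Hugging Face transformers, the Qwen3 model cards pass enable_thinking straight to tokenizer.apply_chat_template(...), and in thinking mode the reasoning comes back wrapped in <think>...</think> before the final answer. Below is a minimal, dependency-free sketch of the two chores around that call: tacking a /think or /no_think soft switch onto a user turn, and splitting raw output into reasoning vs. answer. The helper names are hypothetical, and the parser assumes at most one <think> block per reply.

```python
import re
from typing import Dict, Tuple

# Matches one <think>...</think> block, including newlines inside it.
_THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)

def user_turn(text: str, thinking: bool) -> Dict[str, str]:
    """Build a chat message with a /think or /no_think soft switch appended."""
    tag = "/think" if thinking else "/no_think"
    return {"role": "user", "content": f"{text} {tag}"}

def split_reasoning(raw_output: str) -> Tuple[str, str]:
    """Split model output into (reasoning, answer).

    Non-thinking replies may carry an empty <think></think> block or none at all;
    both cases yield an empty reasoning string.
    """
    match = _THINK_BLOCK.search(raw_output)
    if match is None:
        return "", raw_output.strip()
    reasoning = match.group(1).strip()
    answer = raw_output[match.end():].strip()
    return reasoning, answer

messages = [user_turn("What is 17 * 3?", thinking=False)]
print(messages[0]["content"])  # What is 17 * 3? /no_think

reasoning, answer = split_reasoning("<think>\n17 * 3 = 51.\n</think>\n\nThe answer is 51.")
print(reasoning)  # 17 * 3 = 51.
print(answer)     # The answer is 51.
```

Keeping the parsing separate from the prompt-building means you can show or hide the "pondering" in your UI without touching how you call the model.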

Multilingual to the max! 🌍

Get this: Qwen3 supports a whopping 119 languages and dialects! Chinese, English, Spanish, Arabic, Japanese, Swahili… basically, the entire planet. 🤯

Agent Power-Up! 🤖 Code Smarter!

They’ve juiced up Qwen3’s skills for coding and acting like an agent (think tool use, planning). It plays nice with MCP (Model Context Protocol) too. They even built a Qwen-Agent library to make using these agent skills easier. Less hassle, more doing! 👍
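Tool use at its simplest: the model emits a structured call, your code runs the tool, and the result goes back into the chat. The sketch below assumes Hermes-style calls (a JSON object with "name" and "arguments" wrapped in <tool_call>...</tool_call> tags), which is what Qwen chat templates use as far as we know; double-check your model's template. The two tools are hypothetical stand-ins, and in practice Qwen-Agent or an MCP client would handle this plumbing for you.

```python
import json
import re
from typing import Any, Callable, Dict, List

# Assumed format: one JSON object per <tool_call>...</tool_call> block.
_TOOL_CALL = re.compile(r"<tool_call>\s*(\{.*?\})\s*</tool_call>", re.DOTALL)

# Hypothetical tools the agent is allowed to call.
def get_weather(city: str) -> str:
    return f"Sunny in {city}"

def add(a: int, b: int) -> int:
    return a + b

TOOLS: Dict[str, Callable[..., Any]] = {"get_weather": get_weather, "add": add}

def run_tool_calls(model_output: str) -> List[Dict[str, Any]]:
    """Parse every tool call in the output and run the matching local function."""
    results = []
    for raw in _TOOL_CALL.findall(model_output):
        call = json.loads(raw)
        name = call.get("name")
        func = TOOLS.get(name)
        if func is None:
            # Report unknown tools instead of crashing the agent loop.
            results.append({"name": name, "error": "unknown tool"})
            continue
        results.append({"name": name, "result": func(**call.get("arguments", {}))})
    return results

output = (
    '<tool_call>\n{"name": "add", "arguments": {"a": 2, "b": 3}}\n</tool_call>\n'
    '<tool_call>\n{"name": "get_weather", "arguments": {"city": "Hangzhou"}}\n</tool_call>'
)
print(run_tool_calls(output))
# [{'name': 'add', 'result': 5}, {'name': 'get_weather', 'result': 'Sunny in Hangzhou'}]
```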

Get Your Hands On It! 💻👇

Ready to play? These models are hitting the streets on:

Wanna run it?

- Local Fun: Ollama (ollama run qwen3:30b-a3b), LM Studio, MLX, KTransformers.

- Recommended Deployment: SGLang and vLLM frameworks.

- Demo: Try the Qwen Chat web/app! (chat.qwen.ai)
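Once a local server is up, talking to it is just an HTTP POST. Here's a hedged, stdlib-only sketch: one request body for Ollama's /api/chat endpoint and one for an OpenAI-compatible /v1/chat/completions endpoint like the ones vLLM and SGLang expose. The localhost ports are assumed defaults (Ollama 11434, vLLM 8000; SGLang usually listens on 30000), and passing enable_thinking through chat_template_kwargs is an assumption to verify against your server version.

```python
import json
import urllib.request
from typing import Any, Dict

# Assumed default endpoints; adjust host/port to your setup.
OLLAMA_URL = "http://localhost:11434/api/chat"
OPENAI_COMPAT_URL = "http://localhost:8000/v1/chat/completions"

def ollama_payload(prompt: str, model: str = "qwen3:30b-a3b") -> Dict[str, Any]:
    """Request body for Ollama's /api/chat endpoint, asking for one non-streamed reply."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }

def openai_compat_payload(prompt: str, model: str = "Qwen/Qwen3-30B-A3B",
                          thinking: bool = True) -> Dict[str, Any]:
    """Request body for an OpenAI-compatible server such as vLLM or SGLang.

    Assumption: the server forwards chat_template_kwargs to Qwen3's chat template.
    """
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "chat_template_kwargs": {"enable_thinking": thinking},
    }

def post_json(url: str, payload: Dict[str, Any]) -> Dict[str, Any]:
    """POST a JSON body and decode the JSON response (needs a running server)."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

For example, post_json(OLLAMA_URL, ollama_payload("Hello!"))["message"]["content"] gives you Ollama's reply text, while OpenAI-compatible servers put it under ["choices"][0]["message"]["content"].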

Open Source FTW! 💖 What’s Next? 🚀

Seriously, big props for open-sourcing so much under Apache 2.0! 🙏 The Qwen team is dreaming BIG (AGI/ASI vibes) and already sketching what’s next: more data, bigger models, longer context, more senses (modalities!), and smarter agents… Buckle up! 🎢

Go try Qwen3! 🔥