Alright, hold onto your IDEs, folks: OpenAI just officially launched a research preview of Codex, a cloud-based software engineering agent that can work on many tasks in parallel, powered by a new specialized model called codex-1.

We’re talking about an AI that can write features, answer questions about your codebase, fix bugs, and even propose pull requests, all running in its own sandboxed cloud environment. Is this the AI co-pilot evolving into a full-fledged AI crew member?

OpenAI’s Software Engineering Agent 🤯🤖

So, here we are, folks. The shift is real: AI assistants are moving beyond pure coding help toward acting as proper engineers and product orchestrators!

The goal? A model that generates code closely mirroring human style and PR preferences, adheres precisely to instructions, and can even run tests iteratively until they pass.

So, much like Windsurf’s latest SWE-1 model, OpenAI is aiming beyond code completion at end-to-end product development. (Or so it seems!) In essence: take on entire features, debug gnarly issues, or get a pull request ready for your review. For now, Codex aims to do just that, with each task running in its own isolated cloud sandbox preloaded with your repository.

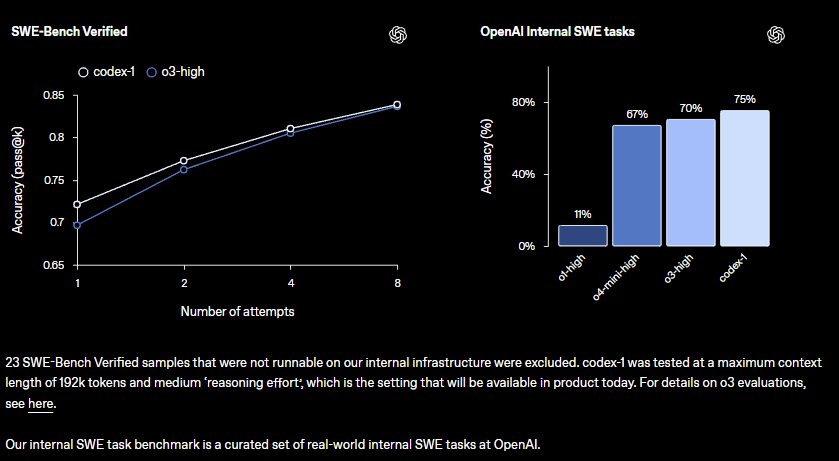

This new powerhouse is fueled by codex-1, a version of OpenAI’s o3 model that’s been specifically optimized for the art and science of software engineering. They’ve trained it using reinforcement learning on real-world coding tasks across various environments.

How Does This AI Coding Maestro Actually Work? 🎶

You access Codex right from the familiar ChatGPT sidebar. You can assign it new coding tasks by typing a prompt and hitting “Code,” or, if you have questions about your codebase, you can click “Ask.”

Each task gets its own dedicated, isolated environment, already set up with your codebase. Codex isn’t just a passive observer: it can read and edit files and run commands, including test harnesses, linters, and type checkers.

And here’s a crucial part for trust and transparency: Codex provides verifiable evidence of its actions through citations of terminal logs and test outputs. This means you can trace every step it took. From there, you can review the results, ask for more revisions, open a GitHub pull request, or directly integrate the changes into your local setup.

Learning from Cursor vibe coding!

OpenAI notes that, much like human developers, Codex agents perform best when they’re given well-configured dev environments, reliable testing setups, and clear documentation. Ah, so OpenAI has clearly learned from the past year of developers trying to tame their Cursor vibe coding with context-aware, custom README files. Nice! 👍

So, now you have AGENTS.md files. These are text files you place within your repository to tell Codex how best to navigate your codebase, which commands to run for testing, and how to follow your project’s standard practices and coding conventions.

Symbiotic relationship wannabe! 🛡️

OpenAI is launching Codex as a research preview, in line with its iterative deployment strategy. Smart AI governance! The company has prioritized security and transparency, which is absolutely critical once AI starts handling more complex coding tasks independently. Users can meticulously check Codex’s work through those detailed citations, terminal logs, and test results.

When Codex is uncertain or hits test failures, it’s designed to say so explicitly, letting you, the human, make informed decisions about how to proceed. It’s a collaborative effort, and OpenAI stresses that users must still manually review and validate all agent-generated code before integrating and executing it.

As a symbiotic researcher, I totally dig this approach! The future is finely aligned cooperation! Which leads us to…

The shift is real: from coders to managers 🚀

OpenAI acknowledges that Codex is still early. Features like image inputs for frontend work and the ability to course-correct the agent mid-task aren’t there yet. Delegating to a remote agent also takes longer than interactive editing. But the vision is clear: a future where developers drive the work they want to own and delegate the rest to highly capable AI agents.

Right now, Codex is rolling out globally to ChatGPT Pro, Enterprise, and Team users, with Plus and Edu users on the “coming soon” list. Users get generous access at no additional cost for the first few weeks, followed by rate-limited access and flexible pricing options.