Quick Take: Google just unleashed Gemma 3n, their fresh open model engineered to run potent, multimodal AI (audio, text, image, video) directly on user devices like phones, tablets, and laptops. It’s built on a slick new architecture for blazing speed and robust privacy, and that same architecture is set to power the next-gen Gemini Nano. An early developer preview is live now, so you can get your hands on it.

🚀 The Crunch

⚡ TLDR: An early preview of Gemma 3n is available for developers. Start exploring its capabilities to get a head start on building next-gen on-device AI features. Focus on leveraging its current strengths in audio, text, and image processing while anticipating full multimodal input soon.

🔬 The Dive

Under the Hood:

- New Mobile-First Architecture: Co-engineered with industry leaders like Qualcomm for peak on-device efficiency and performance.

- Privacy by Design: Local processing is a core tenet, meaning sensitive user data stays on the user’s device, enhancing trust.

- Offline Capability: Empowers apps to remain functional, responsive, and reliable, even without an active internet connection.

- Foundation for Gemini Nano: The architecture behind Gemma 3n will also power the next iteration of Gemini Nano, Google’s most efficient model for on-device tasks.

The Long Wall of Text

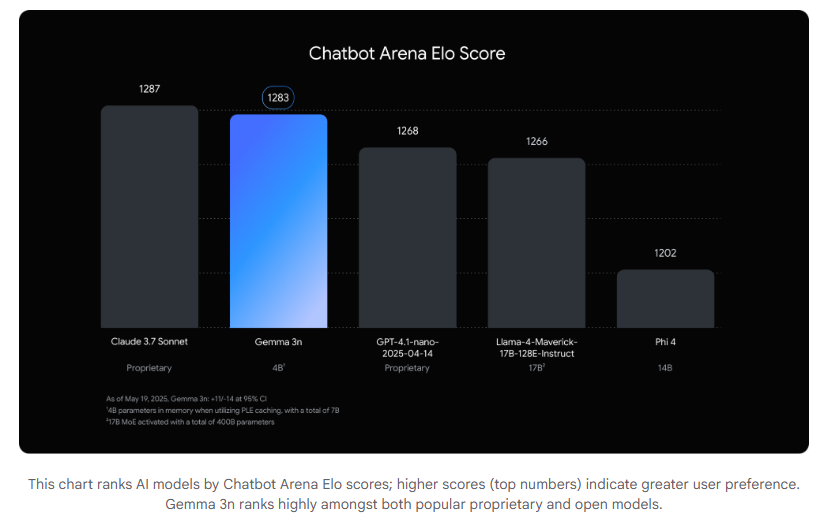

Following up on their Gemma 3 series for cloud and desktop, Google is now doubling down on bringing that AI prowess directly to the edge. Gemma 3n is their pioneering open model built on a brand-new, cutting-edge architecture, co-developed with mobile giants like Qualcomm. By tinkering with Gemma 3n now, you’re getting a sneak peek at a foundational piece of Google’s on-device AI future. And it’s already making waves: on Chatbot Arena Elo scores, Gemma 3n ranks competitively against popular proprietary models and other open models.

This isn’t just a standalone experiment; this advanced architecture is the bedrock for the next generation of Gemini Nano. That means the capabilities you explore in Gemma 3n today will eventually power a wide array of features in Google apps and their vast on-device ecosystem, including major platforms like Android and Chrome later this year.

Gemma 3n’s Multimodal Muscle

Engineered for speed and a minimal footprint, Gemma 3n packs serious capabilities. Because it runs locally, features built with it are inherently privacy-first and offline-ready, functioning reliably even without an internet connection. A key advancement is its expanded multimodal understanding, which now includes audio: Gemma 3n can understand and process audio, text, and images, and offers notably enhanced video understanding. Its audio skills are a standout, enabling high-quality Automatic Speech Recognition (ASR, for transcription) and speech translation (speech to translated text).